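When running jobs on Spark, if you submit several jobs at the same time and then check their status through the web UI on port 18080, you may find that some of them are stuck in the WAITING state, and the console reports: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory. The full console output is as follows: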

16/06/17 09:20:42 INFO DAGScheduler: Submitting 8 missing tasks from Stage 0 (FlatMappedRDD[3] at flatMapToPair at BosBuildSolutionETL.java:67)

16/06/17 09:20:42 INFO TaskSchedulerImpl: Adding task set 0.0 with 8 tasks

16/06/17 09:20:57 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:21:12 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:21:27 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:21:42 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:21:57 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:22:12 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:22:27 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:22:42 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:22:57 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:23:12 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:23:27 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

16/06/17 09:23:42 WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

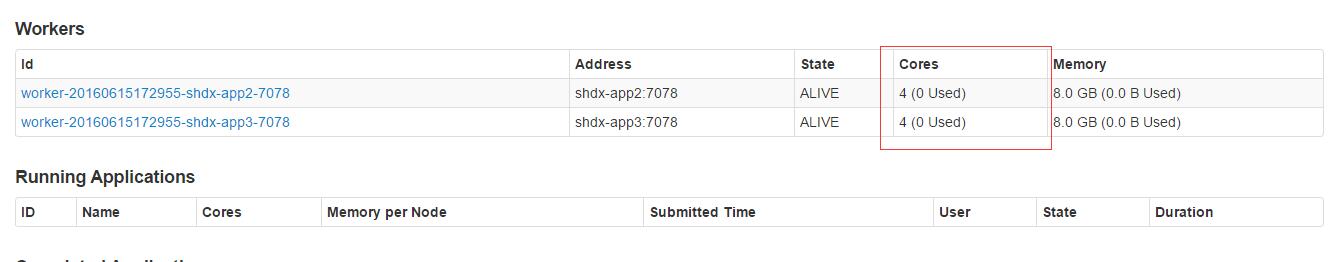

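In fact, the log shows that the job does not have the resources it needs to run. The most likely cause is that we have not given each application enough resources to execute its tasks. In my case I submitted two jobs at the same time, and the web UI on port 18080 shows their status as in the figures below:

1) Initial state, with no jobs running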

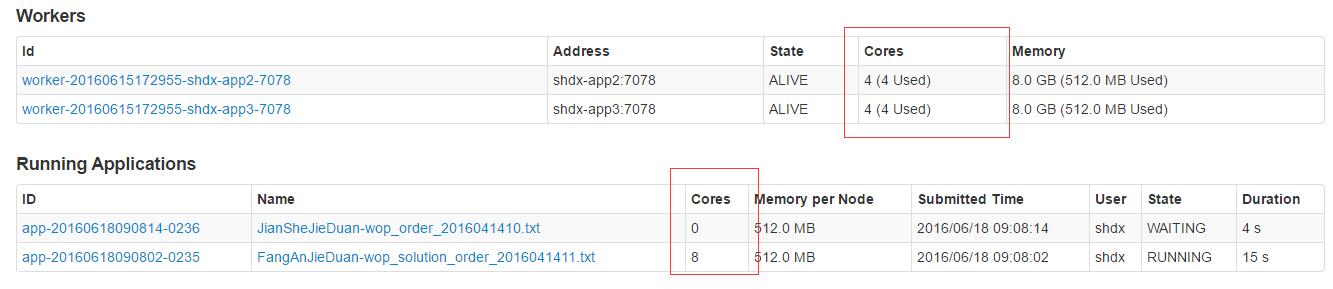

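2) State when the two jobs are running at the same time

This state arises because, by default, Spark places no limit on the spark.cores.max that can be allocated to each application (see the Spark configuration documentation). As a result, the first application to run is given all of the CPU cores, and the second application cannot obtain any resources to run its tasks. The fix is to allocate resources to the Spark jobs sensibly. For example, the two worker nodes in my environment have 8 usable cores in total, so I set the maximum number of cores that each of the two Spark jobs may use in code, as follows: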

// Cap one job at 6 cores

SparkConf sparkConf = new SparkConf().setAppName("FangAnJieDuan-"+fileName).set("spark.cores.max","6");

// Cap the other job at 2 cores

SparkConf sparkConf = new SparkConf().setAppName("JianSheJieDuan-"+fileName).set("spark.cores.max","2");

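With spark.cores.max capped at 6 and 2, the two Spark jobs can run at the same time, as shown in the figure below:

So if a job is stuck in the WAITING state, check the console output. The most likely cause is insufficient resources, and allocating resources sensibly based on that output resolves the problem.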
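
To make the reasoning concrete, here is a minimal plain-Java sketch of the allocation behaviour described above. It does not call Spark at all. It assumes a simplified model in which the cluster hands out cores to applications in submission order, each up to its own cap, and in which an application without spark.cores.max has an effectively unlimited cap. The class name `CoreAllocationSketch` and the method `allocate` are hypothetical names used only for illustration.

```java
import java.util.Arrays;

class CoreAllocationSketch {

    /**
     * Simplified model (an assumption, not Spark's actual scheduler code):
     * applications are served in submission order, and each one receives
     * as many free cores as it asks for, up to its cap.
     * A cap of Integer.MAX_VALUE stands for "spark.cores.max not set".
     */
    static int[] allocate(int totalCores, int[] maxCoresPerApp) {
        int[] granted = new int[maxCoresPerApp.length];
        int free = totalCores;
        for (int i = 0; i < maxCoresPerApp.length; i++) {
            granted[i] = Math.min(free, maxCoresPerApp[i]);
            free -= granted[i];
        }
        return granted;
    }

    public static void main(String[] args) {
        int unlimited = Integer.MAX_VALUE;

        // Two applications, no cap: the first takes all 8 cores,
        // the second gets none and stays in the WAITING state.
        System.out.println(Arrays.toString(allocate(8, new int[] {unlimited, unlimited})));
        // prints [8, 0]

        // Caps of 6 and 2, as in the article: both applications get cores.
        System.out.println(Arrays.toString(allocate(8, new int[] {6, 2})));
        // prints [6, 2]
    }
}
```

In this model an application is left waiting whenever the caps of the applications ahead of it add up to at least the total number of cores, so the caps of jobs that must run concurrently should sum to no more than what the workers offer.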
